Sub-project B06 of the SFB/TRR 318 "Constructing Explainability" investigates the normativity of XAI from the perspectives of ethics and media studies. To date, the normativity of XAI has received little research attention. Among other things, there has been little analysis of what XAI is actually supposed to be good for, given that explanations are not an end in themselves. This neglect of the purposes of XAI stems from a one-sided definition of the problem. The XAI research field typically conceptualises XAI as an answer to the black box problem of AI systems: the lack of transparency and comprehensibility of these systems (the black box) is treated as the price to pay for accuracy and efficiency. This conceptualisation is one-sided in at least two respects. Firstly, opacity is not problematic per se; it is necessary to ask more precisely for whom, for what purpose and in which contexts an explanation of the black box is or should be useful. Secondly, the various contexts and stakeholders need to be considered, and it must be asked which transparency or explanation strategy is appropriate and relevant for whom, and when.

The sub-project contributes to answering these questions in a specific way. In the first phase, our specialist group "Philosophy and Ethics of Scientific and Technological Cultures" questioned the overgeneralised valorisation of explainability. Explanations are not positive per se: they are not always wanted, helpful or necessary. For example, commuters do not need to understand the inner workings of a train in order to get to work. Inappropriate explanations can annoy dialogue partners and lead to a breakdown in interaction. A detailed explanation of every step and mechanism behind a jury's decision-making in a court case could even undermine the authority of the institution. If XAI is to be helpful in real-world applications, the research field must seriously address the relevance of explanations and contextualise them sufficiently.

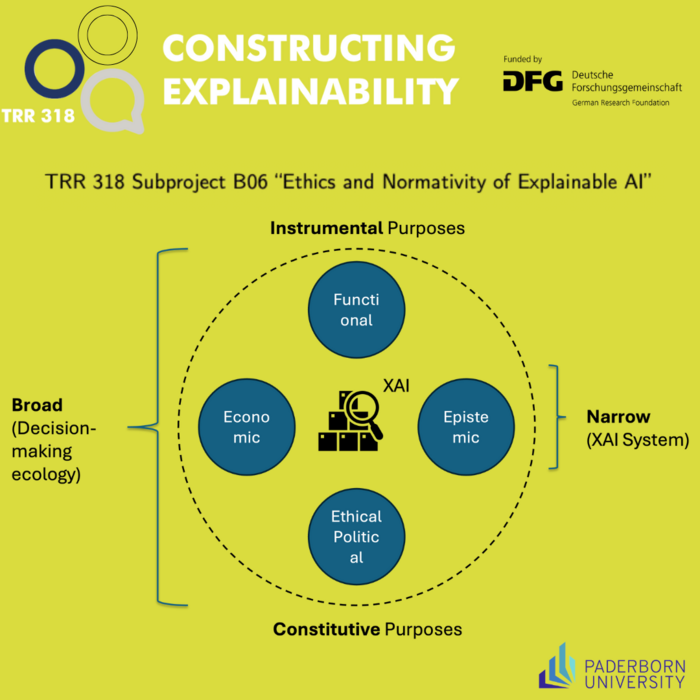

To this end, the researchers have analysed the XAI discourse and created a taxonomy of the purposes typically pursued in the research field. These fall into four main categories:

- functional (e.g. optimisation of AI systems),

- economic (e.g. acceptance and usability for users),

- epistemic (e.g. validating scientific findings) and

- ethical-political purposes (e.g. promoting or enabling responsibility, autonomy).

Depending on the context of XAI use, these purpose categories can be instrumental or constitutive in nature.

- The purposes are instrumental if the same purpose can also be achieved by other means.

- The purposes are constitutive if their realisation necessarily depends on explanations (for example, if self-determination or the assumption of responsibility depends on being able to understand the AI systems sufficiently).

The project's taxonomy makes it clear that the XAI techniques developed to date do not do justice to the diversity of purposes and contexts in which they are used. In phase two of the project, the research group therefore plans to analyse the relevance of explanations in selected scenarios from the fields of medicine, education and police work, each in its respective context. To this end, the group is extending the value-sensitive design method established in technology ethics to include the dimension of organisational contexts.
